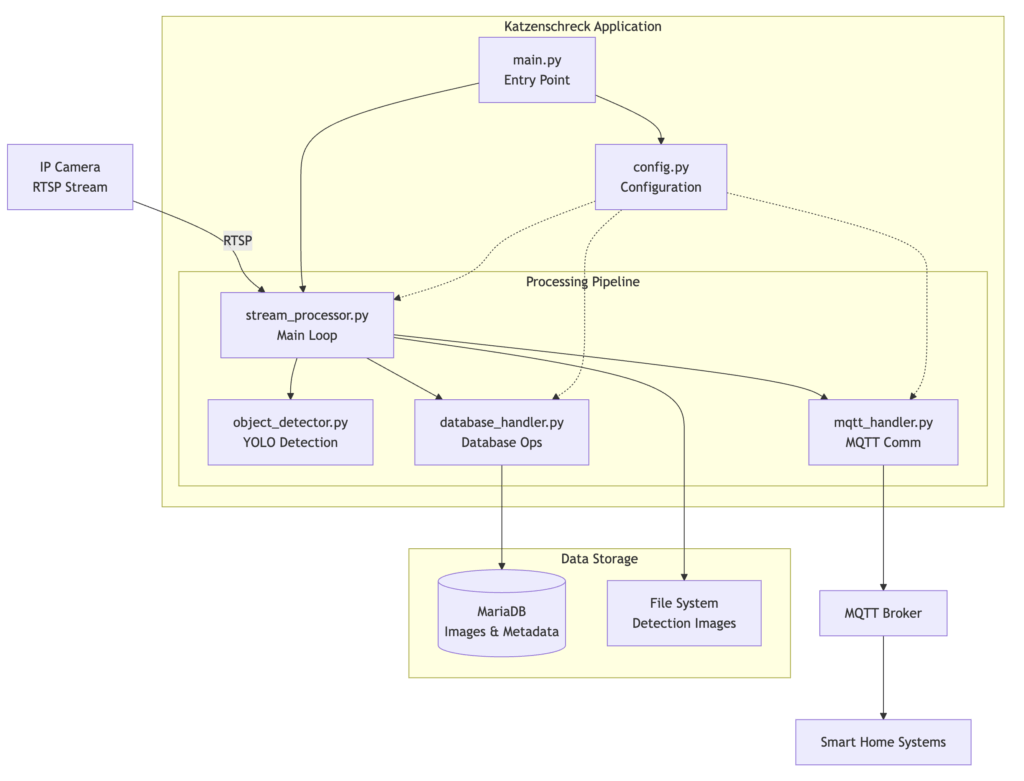

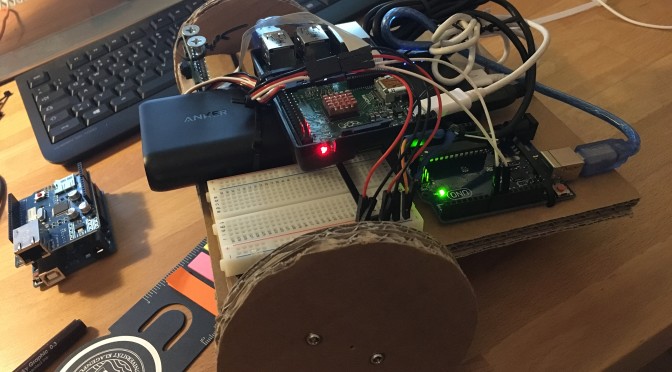

When I first built FCATS Katzenschreck, it was a fun proof of concept — a headless, AI-powered cat detection system running on small edge devices.

Now, it’s faster. Much faster.

🧠 From Raspberry Pi to Jetson Xavier NX

I’ve ported the entire inference script into a Docker container running on an NVIDIA Jetson Xavier NX Developer Board. The performance jump is incredible:

| Device | Model | Inference time per frame |
|---|---|---|
| Raspberry Pi 3 | YOLOv11x | ~50 s |
| Raspberry Pi 4 | YOLOv11x | ~18 s |
| Jetson Xavier NX | YOLOv11x | 188 ms |

That’s not just faster: at roughly 5 frames per second, it’s real-time.
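Per-frame numbers like the ones in the table are only comparable if every device is measured the same way. Here is a minimal timing sketch. It assumes `infer` is any callable that runs the model on one frame; `infer`, `benchmark` and `speedup` are hypothetical names, not the Katzenschreck script. The sketch warms up first, then reports the median latency and the frames per second that latency implies.

```python
import statistics
import time
from typing import Any, Callable, Dict


def benchmark(infer: Callable[[Any], Any], frame: Any,
              warmup: int = 3, runs: int = 20) -> Dict[str, float]:
    """Time infer(frame); return median latency in ms and implied frames/second.

    Assumption: infer() blocks until its result is ready. If it only queues
    asynchronous GPU work, the measured times will be too low.
    """
    for _ in range(warmup):
        infer(frame)  # early calls often include model loading / CUDA init
    timings_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(frame)
        timings_ms.append((time.perf_counter() - start) * 1000.0)
    median_ms = statistics.median(timings_ms)  # median is robust to outliers
    return {"median_ms": median_ms, "fps": 1000.0 / median_ms}


def speedup(baseline_ms: float, new_ms: float) -> float:
    """How many times faster new_ms is than baseline_ms."""
    return baseline_ms / new_ms
```

With the table's numbers, `speedup(18_000, 188)` is about 96 and `speedup(50_000, 188)` about 266. The Jetson is therefore roughly two orders of magnitude faster than either Pi.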

The Jetson can even host multiple inference containers simultaneously, so scaling across multiple cameras is finally practical.

🐈 Training with Real-World Data

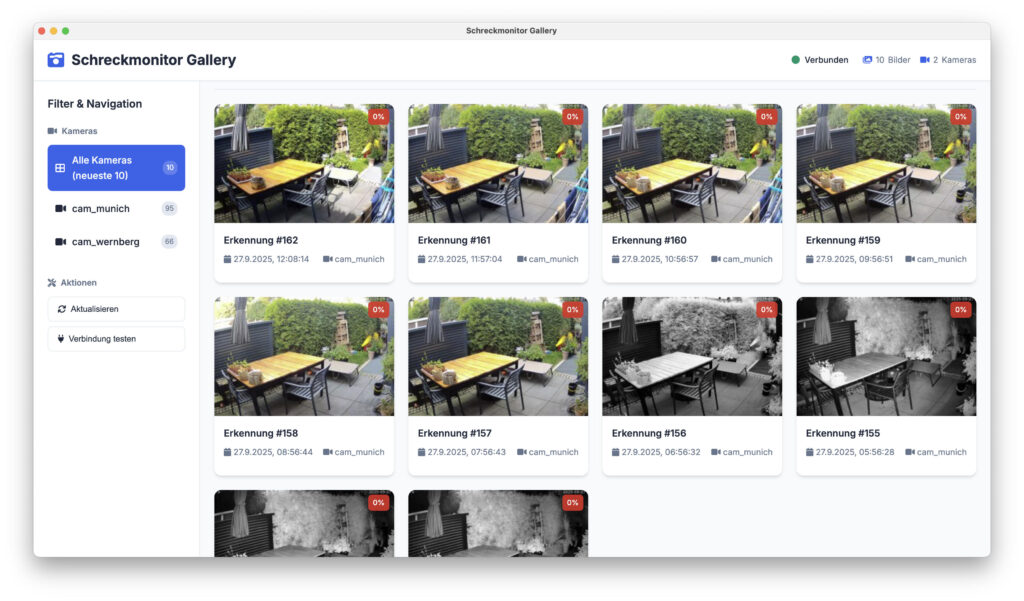

Over the last two months, Katzenschreck has been quietly watching a specific outdoor spot around the clock, collecting data on feline intruders.

I used this footage (~4.5 GB of JPEG frames) to fine-tune my model:

- Re-labeled the dataset to make the annotations more accurate.

- Added data augmentation (lighting, perspective, random occlusion).

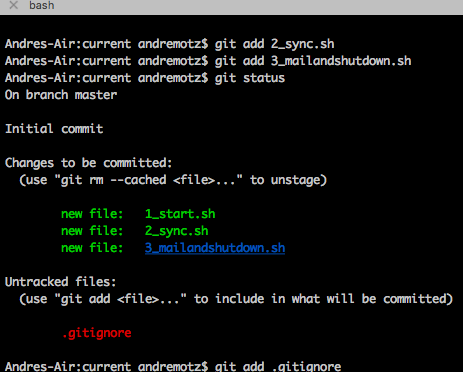

- Retrained YOLOv11x with my own MLOps pipeline, which handles regular re-training.
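The lighting and occlusion augmentations listed above can be sketched with NumPy. This is a minimal illustration, not the actual pipeline. It assumes a random gain and offset for lighting and a single gray rectangle for occlusion, and the helper names are hypothetical. Perspective warping is left out because it would also require transforming the bounding-box labels.

```python
import numpy as np


def adjust_lighting(img: np.ndarray, rng: np.random.Generator,
                    gain: float = 0.3, offset: float = 0.3) -> np.ndarray:
    """Multiply an HxWxC uint8 image by a random gain and add a random offset."""
    alpha = 1.0 + rng.uniform(-gain, gain)        # e.g. 0.7 .. 1.3
    beta = rng.uniform(-offset, offset) * 255.0   # brightness shift in pixel units
    out = img.astype(np.float32) * alpha + beta
    return np.clip(out, 0, 255).astype(np.uint8)


def random_occlusion(img: np.ndarray, rng: np.random.Generator,
                     max_frac: float = 0.25) -> np.ndarray:
    """Paint one gray rectangle, at most max_frac of each side, at a random spot."""
    h, w = img.shape[:2]
    oh = int(rng.integers(1, max(1, int(h * max_frac)) + 1))
    ow = int(rng.integers(1, max(1, int(w * max_frac)) + 1))
    y = int(rng.integers(0, h - oh + 1))
    x = int(rng.integers(0, w - ow + 1))
    out = img.copy()                              # leave the input untouched
    out[y:y + oh, x:x + ow] = 127
    return out


def augment(img: np.ndarray, rng: np.random.Generator, p: float = 0.5) -> np.ndarray:
    """Apply each augmentation independently with probability p."""
    if rng.random() < p:
        img = adjust_lighting(img, rng)
    if rng.random() < p:
        img = random_occlusion(img, rng)
    return img
```

Neither transform changes where objects are, so existing YOLO box labels stay valid. Training frameworks usually expose comparable options as hyperparameters. Ultralytics, for example, has `hsv_v` for brightness and `perspective` for perspective warps.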

The result? The system now detects cats more reliably, even in poor lighting or partial occlusions — and false positives dropped noticeably.
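One common way to put a number on "fewer false positives" is to match predicted boxes to hand-labeled boxes by intersection-over-union (IoU). You then count true positives, false positives and missed cats. The sketch below is a generic example, not the evaluation behind these results. The 0.5 IoU and 0.25 confidence thresholds are assumed values, and the function names are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_counts(preds: List[Tuple[Box, float]], truths: List[Box],
                 iou_thr: float = 0.5, conf_thr: float = 0.25) -> Tuple[int, int, int]:
    """Greedy matching for one frame: returns (TP, FP, FN).

    Higher-confidence predictions claim ground-truth boxes first; each
    ground-truth box can be matched at most once.
    """
    kept = sorted((p for p in preds if p[1] >= conf_thr),
                  key=lambda p: p[1], reverse=True)
    matched = set()
    tp = 0
    for box, _conf in kept:
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(truths):
            if j in matched:
                continue
            v = iou(box, gt)
            if v >= best_iou:
                best_j, best_iou = j, v
        if best_j >= 0:
            matched.add(best_j)
            tp += 1
    return tp, len(kept) - tp, len(truths) - tp


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision = TP/(TP+FP), recall = TP/(TP+FN); 0.0 if a denominator is zero."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```

Summing TP, FP and FN over all labeled frames before calling `precision_recall` gives dataset-level figures. Sweeping `conf_thr` traces out a precision-recall curve.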

🧰 What’s Next

Next up, I’ll publish a detailed look at the improved model’s performance metrics, including confidence scores, precision-recall curves, and a few live comparison demos.

Stay tuned — and if you’ve ever wanted to see what happens when DevOps meets wildlife control, this might be the most overengineered example yet 😄

Update, 2025-12-23: You can download my trained model, which improved my detection accuracy by +10%, here: https://www.andremotz.com/wp-content/uploads/2025/12/best.pt_.zip
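Here is a hedged sketch of trying the downloaded weights with the Ultralytics package. It assumes the extracted archive contains a `best.pt` file and that the model's class list includes a class named `"cat"`. Neither is confirmed above, so check `result.names` after loading. `filter_detections` and `detect_cats` are hypothetical helpers.

```python
from typing import Dict, Iterable, List, Tuple


def filter_detections(cls_ids: Iterable[float], confs: Iterable[float],
                      names: Dict[int, str], wanted: str = "cat",
                      min_conf: float = 0.5) -> List[Tuple[str, float]]:
    """Keep (class name, confidence) pairs matching `wanted` above min_conf.

    Assumption: the model names its cat class "cat"; adjust `wanted` otherwise.
    """
    return [(names[int(c)], float(p)) for c, p in zip(cls_ids, confs)
            if names[int(c)] == wanted and float(p) >= min_conf]


def detect_cats(weights: str, image_path: str,
                min_conf: float = 0.5) -> List[Tuple[str, float]]:
    """Run the weights on one image (requires `pip install ultralytics`)."""
    from ultralytics import YOLO  # lazy import: the helper above works without it
    model = YOLO(weights)
    result = model.predict(source=image_path, verbose=False)[0]
    return filter_detections(result.boxes.cls.tolist(), result.boxes.conf.tolist(),
                             result.names, min_conf=min_conf)
```

Usage would look like `detect_cats("best.pt", "garden.jpg")`, where the image path is a placeholder. Keeping the filtering logic separate from the model call makes it easy to test without a GPU.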